Ludovic Righetti (Project Leader),

Majid Khadiv,

Shahram Khorshidi,

Ahmad Gazar,

Nick Rotella,

Alexander Herzog

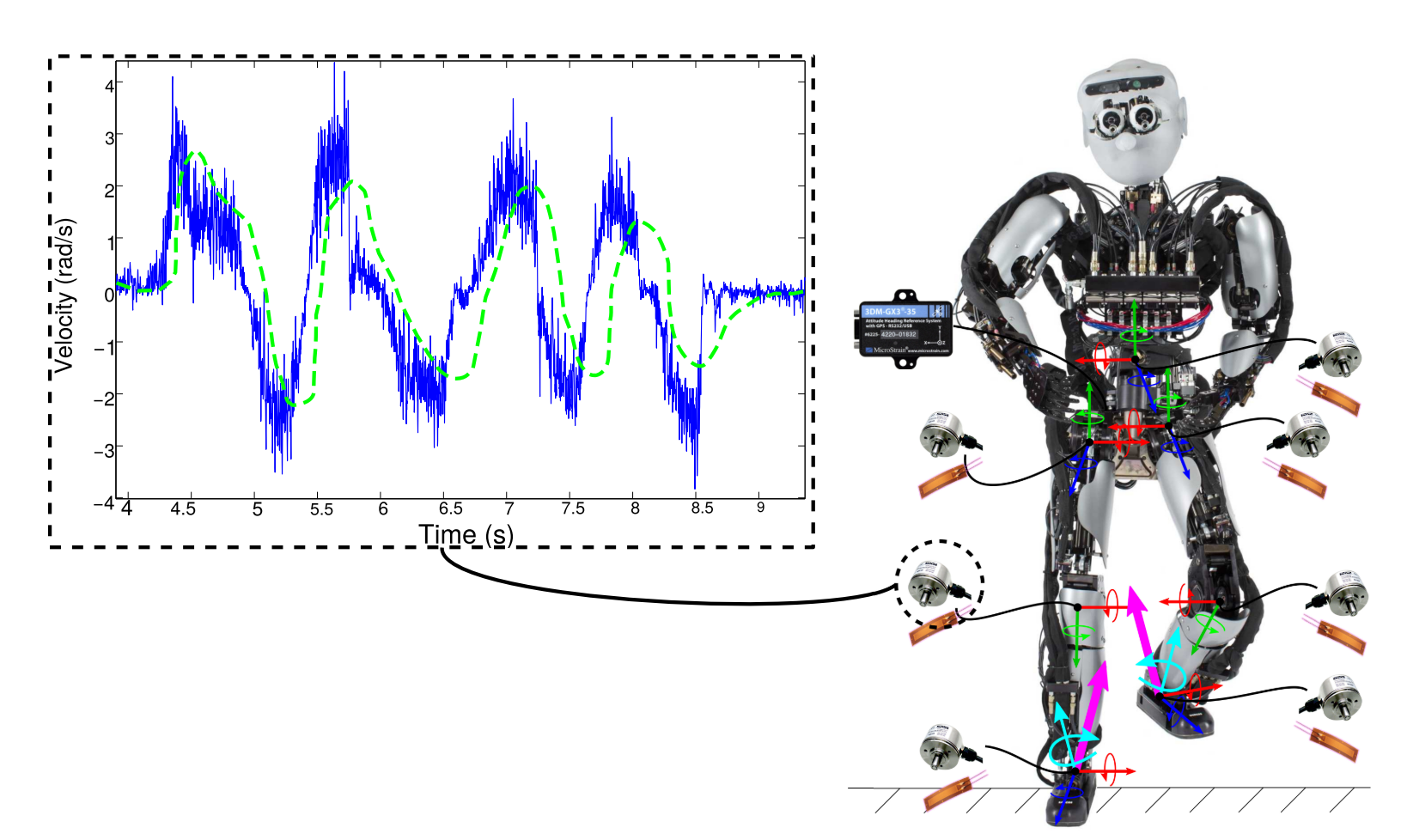

In this project, we explore the problem of fusing sensor information from inertial, position and force measurements to recover quantities that are fundamental to the feedback control of legged robots. Our ultimate goal is to find a systematic way of fusing multiple sensor modalities to improve the control of legged robots.

- Our theoretical work studies the observability properties of nonlinear estimation models to better understand the fundamental limitations of sensor fusion approaches for legged robots.

- We design novel estimators that combine multiple sensor modalities (inertial, position and force) to provide accurate estimates of important quantities, such as the position and orientation of the robot in space, its center of mass and angular momentum, or external forces applied to the robot. We demonstrate in numerical simulations and real robot experiments that our estimators can significantly improve control performance.

- We also investigate machine learning techniques to automatically extract information from sensor data, for example, learning to classify the contact states of a robot without supervision.
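The kind of observability limitation mentioned in the first item can be illustrated with a toy linear model. The sketch below is not the project's nonlinear analysis; it assumes a hypothetical 1D robot whose state holds the base position `p`, base velocity `v`, an accelerometer bias `b` and the stance-foot position `f`, with leg kinematics measuring `f - p` and the foot assumed not to slip. It builds the standard observability matrix and checks its rank.

```python
import numpy as np


def observability_matrix(A: np.ndarray, C: np.ndarray) -> np.ndarray:
    """Stack C, CA, ..., CA^(n-1) for an n-state linear(ized) model."""
    n = A.shape[0]
    blocks = [C]
    for _ in range(n - 1):
        blocks.append(blocks[-1] @ A)
    return np.vstack(blocks)


# Hypothetical 1D model, state x = [p, v, b, f]. The measured IMU acceleration
# enters as a known input (v_dot = a_meas - b), so only the bias term appears in A.
A = np.array([
    [0.0, 1.0, 0.0, 0.0],   # p_dot = v
    [0.0, 0.0, -1.0, 0.0],  # v_dot = a_meas - b (input term omitted)
    [0.0, 0.0, 0.0, 0.0],   # b_dot = 0 (constant bias)
    [0.0, 0.0, 0.0, 0.0],   # f_dot = 0 (stance foot assumed not to slip)
])
# Leg kinematics measure the foot position relative to the base: y = f - p.
C = np.array([[-1.0, 0.0, 0.0, 1.0]])

O = observability_matrix(A, C)
rank = np.linalg.matrix_rank(O)

# The right singular vector for the smallest singular value spans the
# unobservable direction (null space of dimension n - rank = 1 here).
_, _, Vt = np.linalg.svd(O)
unobservable = Vt[-1]

print("rank:", rank, "of", A.shape[0])
print("unobservable direction:", np.round(unobservable, 3))
```

The rank comes out as 3 of 4, and the unobservable direction is proportional to `[1, 0, 0, 1]`: shifting the base and the foot by the same amount leaves every kinematic measurement unchanged, so absolute position cannot be recovered from these sensors alone, whereas velocity and the accelerometer bias can.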
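As a minimal illustration of fusing inertial and position measurements, the sketch below uses a plain linear Kalman filter, a textbook technique swapped in for illustration and not necessarily the estimators developed in this project. It assumes a hypothetical 1D setting: the accelerometer reading already has gravity and orientation removed and drives the prediction step, while leg kinematics provide a noisy base position for the update step. The function name `kalman_fuse`, the sampling rate and the noise levels are all hypothetical.

```python
import numpy as np


def kalman_fuse(a_meas, y_meas, dt, sigma_a, sigma_y):
    """Fuse IMU acceleration (prediction) with kinematic position (update).

    State x = [p, v] in a hypothetical 1D world frame; gravity and
    orientation are assumed already removed from a_meas.
    """
    F = np.array([[1.0, dt], [0.0, 1.0]])
    B = np.array([0.5 * dt**2, dt])
    H = np.array([[1.0, 0.0]])
    Q = np.outer(B, B) * sigma_a**2  # accelerometer noise mapped into the state
    R = np.array([[sigma_y**2]])

    x = np.zeros(2)
    P = np.eye(2) * 1e-6  # assume the initial state is well known
    estimates = []
    for a, y in zip(a_meas, y_meas):
        # Predict by integrating the measured acceleration.
        x = F @ x + B * a
        P = F @ P @ F.T + Q
        # Correct with the base position given by leg kinematics.
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (np.array([y]) - H @ x)
        P = (np.eye(2) - K @ H) @ P
        estimates.append(x[0])
    return np.array(estimates)


def rms(e):
    return float(np.sqrt(np.mean(e**2)))


# Synthetic data (hypothetical values): 1 kHz sampling for 2 s.
rng = np.random.default_rng(0)
dt, n = 0.001, 2000
sigma_a, sigma_y = 0.1, 0.05
t = np.arange(n) * dt
a_true = np.sin(2 * np.pi * 0.5 * t)

# Ground truth integrated with the same discrete model the filter uses.
p_true = np.zeros(n)
p, v = 0.0, 0.0
for k in range(n):
    p, v = p + v * dt + 0.5 * a_true[k] * dt**2, v + a_true[k] * dt
    p_true[k] = p

a_meas = a_true + rng.normal(0.0, sigma_a, n)
y_meas = p_true + rng.normal(0.0, sigma_y, n)
p_est = kalman_fuse(a_meas, y_meas, dt, sigma_a, sigma_y)

print("kinematics only RMS error:", rms(y_meas - p_true))
print("fused estimate RMS error: ", rms(p_est - p_true))
```

Because the filter weights each source by its assumed noise, the fused position error ends up well below the error of the kinematic measurement used on its own. A real legged-robot estimator would also have to handle 3D orientation, gravity, multiple feet and foot slippage.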
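The idea of classifying contact states without labels can be sketched with two-cluster k-means on a foot's normal force. This is a deliberately simple stand-in for illustration, not the learning method used in the project. The gait pattern, force distributions and the helper `kmeans_1d` below are hypothetical.

```python
import numpy as np


def kmeans_1d(values, n_iter=50):
    """Two-cluster k-means on a 1D signal.

    Centers start at the minimum and maximum, so cluster 1 is always the
    higher-force cluster, which is interpreted here as "in contact".
    """
    centers = np.array([values.min(), values.max()], dtype=float)
    for _ in range(n_iter):
        # Assign each sample to the nearest center.
        labels = np.abs(values[:, None] - centers[None, :]).argmin(axis=1)
        new_centers = np.array([values[labels == j].mean() for j in range(2)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# Hypothetical gait: alternating 100-sample swing and stance phases.
rng = np.random.default_rng(1)
n = 1000
true_contact = (np.arange(n) // 100) % 2 == 1
force = np.where(
    true_contact,
    rng.normal(150.0, 20.0, n),  # stance: foot carries load [N]
    rng.normal(2.0, 1.0, n),     # swing: near-zero force with sensor noise [N]
)

labels, centers = kmeans_1d(force)
accuracy = np.mean(labels.astype(bool) == true_contact)
print("cluster centers [N]:", np.round(centers, 1))
print("agreement with ground truth:", accuracy)
```

The ground-truth labels are used only to evaluate the result, never to fit it. On real robots, contact forces are noisier and partial contacts blur the two clusters, which is why richer features and models are needed in practice.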